The Intellectualisation of Online Hate Speech: from the Vernacular to the Conceptual

Team Members in alphabetic order

- Laurie Le Bomin

- Jonathan Hendrickx

- Kristina Herbst

- Mikkel J. Hjelt

- Bart Josten

- Daniël Jurg

- Emillie V de Keulenaar

- Ivan Kisjes

- Magnus Knustad

- Valérie van Mameren

- Cassian Osborne-Carey

- Stephanie Tintel

Contents

- Summary of Key Findings

- 1. Introduction

- 2. Methodology, data sets and research questions

- 5. Findings

- A. The increased 'intellectualisation' of race-related language

- B. 'Race' as an important topic of discussion in right-wing comments and video transcripts

- C. 'Race baiting' has become a key topic in discussions involving the term 'race' in both left-wing and right-wing comments

- D. The ephemeral meanings of the term 'race baiting' -- from the official to the vernacular

- F. Uses of the term 'jew' as a marker of radicalisation?

- 6. Discussion

- 7. Conclusions: the need for new analytical methods to dispute ancient ideas

- 8. References

Summary of Key Findings

- In right-wing channels, the word ‘race’ has become a topic of scientific, intellectual concern, tying back to the language of scientific racism.

- Accusations of misuse of the word ‘race’ appear to delimit the frontiers of discussions about race between left- and right-wing channels.

- ‘Intellectualisation’ in language associated with ‘jew’ does not take place -- the word is instead linked to different discourses about ethnicity, religion, and political control.

1. Introduction

On the 31st of October 2018, professor Jordan Peterson was invited to the University of Amsterdam by the interview platform Room for Discussion to shed light on “a society in crisis” (Room for Discussion). After the platform announced the controversial Canadian professor as a guest, an emblematic debate unfolded concerning the platforming of controversial ideas. Opponents of the invitation argued, in an open letter, that Peterson held many pseudo-scientific ideas that should be given a strong oppositional voice when presented within an academic context (Folia). Proponents of the invitation argued, as Peterson himself did, that one should have the freedom to discuss controversial topics that go against the academic status quo, which, according to Peterson, is dominated by left/neo-Marxists and postmodernists (Rebel Media).

Peterson is now referred to by many traditional media outlets, e.g. The New York Times, as “the most influential public intellectual in the Western world”. He started his rise to the public mainstage on the online platform YouTube, where he uploaded his lectures, thoughts, and advice (Brooks). But Peterson is merely one of the controversial personas within a rapidly growing online collective known as The Intellectual Dark Web: a group of intellectuals arguing, among other things, about the biological differences between men and women, the toxicity of identity politics, and the problem of political correctness, unanimous in its call for a space that allows maximum freedom of speech and thought (Weiss). YouTube has become one of the main platforms for engaging with the controversial content and discussions uploaded and shared by followers of the so-called Intellectual Dark Web. As Joe Rogan -- one of the leading figures within the field of lengthy contrarian discussions (currently 4.5 million YouTube subscribers) -- explains, “people are starving for controversial opinions” (qtd. in Weiss). As Lewis points out, YouTube seems to have become a market for ideas that are considered to be too controversial to be discussed by the academic establishment (15).

From the vernacular back to the conceptual

Most members of the Intellectual Dark Web, an eclectic group of academically trained figures, would argue that their goal is to provoke thought in the pursuit of Truth in a society that, in their eyes, has become too politically correct. However, while they explicitly reject the idea that their message is discriminatory, they seem to reinvest in concepts originating from the same intellectual sources as terms associated with vulgar and explicit hate speech. While hate speech is generally defined as an ensemble of actively offensive, derogatory and violent language targeting the ethnic, religious or sexual characteristics of a group or individual (Belavusau), these concepts, we argue, correspond to the intellectual sources -- political, scientific, social and otherwise -- that have justified and contributed to such discriminating attitudes. This includes, for example, the (pseudo-)scientific branch known as ‘scientific racism’, which, until around the time UNESCO formally denounced it in its 1950 anti-racist statement ‘The Race Question’, actively produced research on race as an object of scientific concern, particularly on the correlation between race and IQ, the classification of human populations into physically discrete races, craniometry and physical anthropology.

The use of terms originating from these intellectual sources rather than actively discriminating ‘hate speech’ terms, then, poses a challenge for both computational hate speech detection and manual, close-reading analysis. A historical approach to detecting hate speech defies the understanding that there is “a simple antonymous conception of hate speech versus acceptable speech” (Pohjonen and Udupa, 1173). Rather than calling for a detection of hate speech at face value, it introduces a need to contextualise it within larger “online debates with an attention to [...] particular histories of speech cultures” (Pohjonen and Udupa, ibid). Notions associated with racism, for example, derive from a long intellectual history that has fed into the history of far-right political thought, particularly its conceptions of ethnic and racial nationalism.

Hence, our report departs from a historical understanding of hate speech, namely by identifying key terms in radical right-wing discourse -- that is, terms that political publics are often radicalised about, such as ‘race’ and ‘jew’ -- and then tracing their evolution over time. In it, we explore how terms traditionally defined as or associated with ‘hate speech’ have evolved over time, and whether, through them, we can detect the re-emergence of ideas associated with effectively racist intellectual sources -- namely, scientific racism -- in far-right YouTube publics. We will define this move from ‘hate speech’ to intellectual, ‘pseudo-academic language’ as a form of ‘radicalisation’ in its own right. We thus pose the following question: how has language referring to ‘race’ and Jewish people (‘jew’) evolved from 2006 to 2018 in right-wing channel transcripts and comments? Do any conceptual evolutions of these terms illustrate a process of radicalisation?

The meshes between hate speech, 'alternative knowledge' and post-truth

The elaboration of these ‘alternative’ sources of ideas and knowledge in videos and comments has posed a notable challenge to YouTube specifically. While YouTube's policy guidelines are clear on what constitutes ‘direct racism and threats’, ‘intellectual arguments’ about, for example, race and intelligence cannot simply be censored. Given their proximity to the sciences -- biology, taxonomy, anthropology -- these ideas have often been defended by far-, alt- and/or new-right publics as sources of legitimate or ‘good’ knowledge in their own right (Lyotard). Were it to ban this type of speech, YouTube would have to become an arbiter of what constitutes a valid argument -- or, even more problematically, 'Truth' itself.

The problem of the ‘intellectualisation’ of hate speech on YouTube, then, ties back to the larger questions surrounding our current post-truth condition. Following a Digital Methods approach, when observing social phenomena through the lens of the platform, one should always take into account the fact that the social is intricately linked with the medium (Rogers 1). As argued by McIntyre and Kakutani, while online platforms have included a more diverse array of actors, they have equally contributed to destabilizing knowledge authorities (McIntyre; Kakutani).

Taking this claim a step further, the philosopher Fuller argues that these platforms have destabilized the concept of knowledge altogether. As Fuller points out, rhetoric and Truth, while treated as separate disciplines in the modern scientific age, used to be considered intrinsically linked, e.g. as argued by Plato and his mentor Socrates. Both ancient philosophers understood that the way truth was communicated was as important as what constituted Truth. Fuller argues that the age of entertainment media and the internet has shown, again, how intricately linked rhetoric is with the concept of Truth. The post-truth condition is then not only, as is often thought, an inability to think rationally. It also implies that platforms such as YouTube have a distinct way of offering users the tools and affordances to articulate their own ‘truths’. This is not a normative, relativistic argument proposing that one could argue for all sorts of claims in a just way. It merely serves to show that knowledge is to a certain extent built by the epistemological and rhetorical affordances of the online spaces where it is developed and disseminated. The rise and influence of an intellectual movement such as the so-called ‘Intellectual Dark Web’ should, therefore, be understood with a specific idea of how certain ideas are constructed and disseminated with respect to YouTube's affordances. This would imply examining affordances allowing for intellectual debates, such as commenting spaces and their relation to the videos in which they are embedded. Our project is set against the background of this mediation of knowledge.

2. Methodology, data sets and research questions

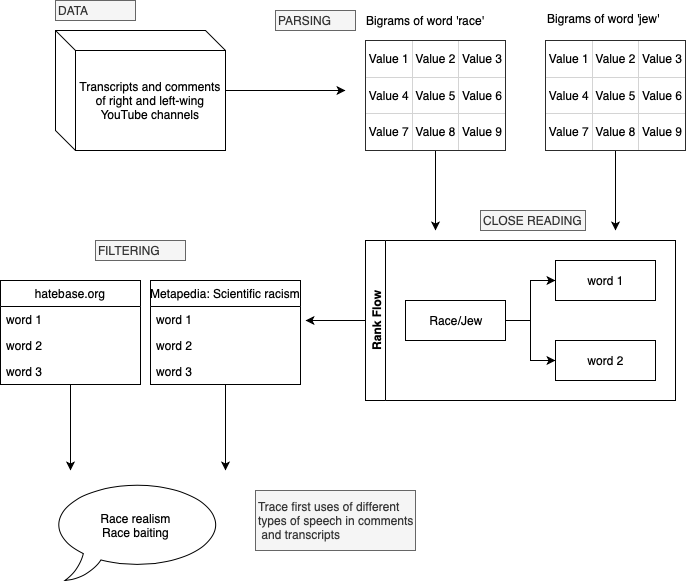

Given that our historical approach was primarily geared towards assessing the evolution of concepts associated with scientific racism, the aim of our research has been to examine how language related to the words ‘race’ and ‘jew’ (bigrams) has evolved from 2006 to 2018 in right-wing channel transcripts and comments. For a comparative analysis, we have also looked into left-wing comments and transcripts from this period.

In order to make our case, we have utilized different datasets. Firstly, our YouTube dataset was compiled by Dimitri Tokmetzis, a journalist and expert on extremism at De Correspondent. The dataset included transcripts and comments of predetermined right-wing and left-wing channels on YouTube from 2006 to 2018. In order to identify the right-wing and left-wing channels, Tokmetzis drew on a combination of expert-established lists of extremist organizations from sources like Kafka (a Dutch anti-fascist organization), as well as information provided by Wikipedia and academic literature (Tokmetzis). From there on, the focus was set on European organizations as well as some recognized YouTube channels in the United States that were referred to in news sources and scholarly work. Furthermore, a snowballing technique was used to identify extremist channels through their subscriptions and featured channels.

Secondly, we needed to determine the two types of speech whose evolution we were interested in tracing: ‘hate speech’ and scientific racism. In order to determine a shift in language over time, we relied on two expert lists. For the former, we have relied on (1) English-language terms provided by hatebase.org. For the latter, we have relied on (2) frequent words mentioned in Metapedia’s page on Race and Intelligence. Hatebase.org defines itself as “the world's largest structured repository of regionalized, multilingual hate speech” (Hatebase.org) and thus served as an expert list of hate speech terms. Metapedia, on the other hand, is an alternative right-wing, anti-semitic and fascist wiki that captures many of the new linguistic terms used by far-right publics (Arnstad). Metapedia defines itself as an ‘online encyclopedia that focuses on culture, art, science, philosophy and politics’, specifically for ‘pro-European activists’ -- ‘European’, here, referring to ideas of European civilisation, culture and ethnicity (Metapedia). Given its political leanings, Metapedia expresses support for the idea that there exists a correlation between race and IQ, generally reflecting a positive view of theories associated with scientific racism (de Keulenaar et al., 2018). In order to locate terms specifically related to scientific racism, i.e., an intellectual tradition that regards race as an object of scientific concern, we extracted frequent terms from Metapedia’s page on Race and Intelligence and used them as an example list. Through the use of these two lists, we could operationalize shifts within far-right discourse from hate speech (as defined by hatebase.org) to terms related to scientific racism (as drawn from Metapedia) in YouTube transcripts and comments.

We queried our dataset by obtaining and close-reading bigrams of two terms that previous research hypothesized to have changed in their use over time in YouTube transcripts and comments: ‘race’ and ‘jew’ (Tokmetzis et al., 2019). This way, we could gauge the associative strength of terms closely related to these two words.
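As a rough illustration of the bigram step, the following sketch counts the words that directly follow a target term in a set of texts. It is not the pipeline used for this report: the tokenizer, the function names and the sample sentences are all assumptions made for illustration, and no stemming is applied.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and keep alphabetic tokens only (a deliberately crude tokenizer)."""
    return re.findall(r"[a-z]+", text.lower())

def bigrams_with(target, documents):
    """Count bigrams in which `target` is the first word."""
    counts = Counter()
    for doc in documents:
        tokens = tokenize(doc)
        for first, second in zip(tokens, tokens[1:]):
            if first == target:
                counts[(first, second)] += 1
    return counts

# Hypothetical sample texts, invented for illustration only.
sample = [
    "This is just race baiting by the media.",
    "Race realism is discussed in the video.",
    "More race-baiting, as usual.",
]
counts = bigrams_with("race", sample)
top = counts.most_common(2)
```

Raw counts like these only indicate which words co-occur with the target term; an association measure that accounts for overall word frequency would be needed to speak of associative strength in a stricter sense.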

Associated words were then examined on three different levels. First, we observed the first uses and development of the words associated with ‘race’ over the entire duration of our dataset. Then, we looked at the ways the term ‘race’ was discussed in right-wing channels, zooming in on the term ‘race realism’ in transcripts and comments from 2010 to 2018. And finally, as an example, we contextualized a significant term, ‘race baiting’, tracing back the evolution and appropriation of its definition in our datasets and in popular compendia of vernacular language, such as the Urban Dictionary.

Our method

5. Findings

Our findings can be summarised in five key observations: (1) the increase in ‘intellectualised’ language associated with discussions about ‘race’; (2) the term ‘race’ becoming an important topic of discussion within right-wing comments and transcripts; (3) the presence of contestations from users regarding misuses of the term ‘race’ in left-wing videos, as exemplified by an increased use of the term ‘race-baiting’; (4) examples of the politically contingent meaning of the term ‘race-baiting’ in transcripts, comments and external dictionaries; and (5) usages of the term ‘jew’ distinct from those of the word ‘race’.

A. The increased 'intellectualisation' of race-related language

Figure 1 shows the development of terms strongly associated with the word ‘race’ in right-wing comments and transcripts. Among these terms, as indicated in green, there is a significant increase in terminology related to scientific racism after 2015. Furthermore, as indicated in purple, there is a proliferation of terms referring to misuses of the word ‘race’ (‘race baiting’), i.e. implying that people on either side of the political spectrum are using the concept of race as a gateway to their political agenda. This is indicated by words such as ‘race bait’, ‘race hustler’, ‘race card’, etc. There is relatively little hate speech (as defined by hatebase.org) in the visualization, as shown in red. This may be because YouTube monitors and bans most hate speech terms, or because the hatebase.org database was not exhaustive enough to encompass the vernacular hate speech proper to YouTube comment sections or general Internet lingo.

Figure 1. Frequency of words associated to scientific racism (green), hate speech (red) and allegations of misuses of the term 'race' (e.g., 'race baiting', in purple)
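The kind of tally behind such a chart can be sketched as follows, assuming bigrams have already been extracted and dated. The category names, the abbreviated term sets and the sample records are hypothetical placeholders; the report's actual lists came from hatebase.org and Metapedia and are not reproduced here.

```python
from collections import defaultdict

# Hypothetical, abbreviated term lists used only for this sketch.
CATEGORIES = {
    "scientific_racism": {"race realism", "race realist"},
    "misuse_allegation": {"race bait", "race card", "race hustler"},
}

def category_counts_by_year(records):
    """records: iterable of (year, bigram) pairs. Returns {year: {category: count}}."""
    totals = defaultdict(lambda: defaultdict(int))
    for year, bigram in records:
        for category, terms in CATEGORIES.items():
            if bigram in terms:
                totals[year][category] += 1
    # Convert nested defaultdicts to plain dicts for easier inspection.
    return {year: dict(cats) for year, cats in totals.items()}

# Invented records for illustration; bigrams outside both lists are ignored.
records = [
    (2014, "race card"),
    (2016, "race realism"),
    (2016, "race bait"),
    (2016, "race realism"),
    (2016, "race war"),
]
result = category_counts_by_year(records)
```

Each year's counts could then be plotted as one coloured series per category.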

B. 'Race' as an important topic of discussion in right-wing comments and video transcripts

Figure 2, below, presents a visualisation of the different video formats uploaded by right-wing channels using the term ‘race realism’. First, we obtained all the right-wing channels that mentioned ‘race realism’ in their transcripts. Then, we obtained the thumbnails of videos containing the words ‘race realism’ in order to classify videos based on the types of activities portrayed in the thumbnails, their corresponding video titles and their length. The y-axis represents the number of videos, while different colors represent different types of activities. We observe an increase, indicated in light orange, of activities related to intellectual discussions, as lengthy, argumentative and intellectual content has gained more importance. This, arguably, shows that ‘race realism’ is conceived as an important topic of discussion; a topic that calls for an online (public) debate in right-wing videos and comments.

Figure 2. Types of activities involved in right-wing videos mentioning the term 'race realism'

Figure 4, below, visualizes the presence of the term ‘race realism’ in both transcripts and comments from right-wing channels. We observed that it first appeared in right-wing transcripts around 2011 and only appeared significantly later, in 2016, in right-wing comments. Also during 2016, it is the video content, rather than user comments, that appears to initiate the conversation on race realism. This suggests that discussions on race, possibly encouraged by the content of the right-wing videos, are followed up in the comments where, accordingly, scientific terms were being debated.

Figure 4. Presence of the word ‘race realism’ in right-wing transcripts and right-wing comments over time
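The comparison between transcripts and comments rests on finding when a term first appears in each source. A minimal sketch of that lookup is given below; the record layout `(year, source, text)` and the sample records are assumptions invented to mirror the pattern described above, not data from the report.

```python
def first_year(term, records):
    """records: iterable of (year, source, text). Returns {source: earliest year whose text contains term}."""
    earliest = {}
    for year, source, text in records:
        if term in text.lower():
            if source not in earliest or year < earliest[source]:
                earliest[source] = year
    return earliest

# Invented records for illustration only.
records = [
    (2016, "comment", "Race realism again?"),
    (2011, "transcript", "today we talk about race realism"),
    (2013, "transcript", "more on race realism"),
    (2012, "comment", "nice video"),
]
onset = first_year("race realism", records)
```

A gap between the two onset years is what would suggest that the term was introduced by video content before being taken up by commenters.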

C. 'Race baiting' has become a key topic in discussions involving the term 'race' in both left-wing and right-wing comments

We observed within the data that the concepts ‘race card’ and ‘race-baiting’ were frequently mentioned in both the right-wing and the left-wing datasets. We therefore performed a close reading to understand why and how these terms were being used. We found that in both spheres, it was mostly right-wing users who used the term ‘race-baiting’ in comment sections to accuse liberals of misusing the topic of race by, e.g., bringing it into public debate deliberately and sentimentally.

Our observation was backed by the fact that the word ‘race-baiting’ rarely appeared in left-wing transcripts, but was instead predominantly present in comment sections (Figure 5). This came to our attention when we extracted the most frequent words associated with ‘race-baiting’ in comments responding to left-wing videos. The words most closely associated with ‘race baiting’ or ‘race bait’ were, for instance, ‘race-baiting TYT [The Young Turks, a progressive left-wing YouTube channel]’, ‘race-baiting people’, ‘race-baiting shit’, ‘race-baiting bullshit’, and ‘race-baiting media’. TYT was in fact the channel most critiqued for ‘misusing’ the term ‘race’, allegedly to make its news reporting and commentaries more sensationalistic. This finding further suggests that opposing commenters were actively commenting on left-wing videos.

Figure 5. Race-related terms in comments and transcripts in left-wing channels. Above, terms most strongly associated to the terms 'race baiting' in left-wing transcripts (videos); below, the equivalent in comments responding to those videos.

Most significant, however, is the finding that the term ‘race baiting’ appears to have set a precedent for discussions subscribing to race realism in right-wing videos. The usage of the term ‘race baiting’ suggests first and foremost that the term ‘race’ has been spoken about on YouTube not so much for what it means, but more so for how its usage demarcates the growing enmity between different YouTube publics. While discussions about race have increased steadily since 2008 in the context of debates about ‘gender’, ‘ethnic[ity]’, ‘race relations’, ‘race and religion’, ‘race and class’, ‘race issues’ or ‘racism’, comments left on left-wing videos show that commenters take a generally defensive stance, accusing the videos of mislabelling them, or subjects mentioned in the videos, as racist. Given that terms related to ‘race baiting’ preceded those associated with scientific racism, we can question whether this atmosphere of antagonism has set the stage for actively racist points of view to gain prominence, as happened in 2017 with the resurgence of content subscribing to scientific racism.

D. The ephemeral meanings of the term 'race baiting' -- from the official to the vernacular

The contingent and primarily partisan usage of the term ‘race baiting’ in our YouTube dataset has led us to question whether it is also used in the same way outside of YouTube. By close reading left- and right-wing comments mentioning the term ‘race baiting’, we have seen that the meaning of the term has been contested. Initially referring to a racist act, the term was later used to signify the complete opposite -- that is, to refer to the phenomenon of misusing the term ‘race’ for political motives.

In an attempt to contextualize the term ‘race baiting’ by querying earlier usages in the literature, we have found that the definition of the term ‘race-baiting’ has significantly changed in the last few decades. Through Google Ngram, we found that the term appeared as early as 1943 in a Billboard Magazine ad, where it referred to pejorative language targeting ethnic minorities.

An example of an early usage of the term ‘race baiting’, found here in a Billboard Magazine ad from 1943 via Google Ngram

Currently, however, authoritative dictionaries such as Merriam-Webster define the term as “the making of verbal attacks against members of a racial group”.

An example of a ‘mainstream’ definition of the term ‘race baiting’, as found in the Merriam-Webster dictionary dating from 2019

Meanwhile, the crowdsourced and largely vernacular Urban Dictionary (which contains user-generated, ‘grassroots’ definitions, shedding light on vernacular definitions of terms circulating on the Internet) suggests otherwise. Contrary to Merriam-Webster’s definition, its top definition as of 2019 reflects what users meant by ‘race baiting’ when critiquing left-wing channels, as illustrated above: “an attempt to cloud logic and facts by appealing to emotion through false accusations of racial discrimination”.

The Urban Dictionary’s current top definition for the term ‘race baiting’ (January 2019)

F. Uses of the term 'jew' as a marker of radicalisation?

Finally, in an attempt to zoom in on how language was used to describe ethnic minorities, we decided to also investigate uses of the term ‘jew’. Our hypothesis was that, as a marker of radicalization, the intellectualisation of the word ‘jew’ would coincide with the one seen with ‘race’. By analysing both datasets of right-wing and left-wing YouTube comments and transcripts, we set out to visualize the evolution of terms associated with ‘jew’ and show the growth in frequency of the terms over time. We found that most terms were still related to vulgar hate speech inciting violence (‘jew hatr’, ‘termit’, ‘kill’, ‘destruction’), religion and ethnicity (‘christian’, ‘gentil’, ‘muslim’), and power (‘control’). There was no increase in terms related to scientific racism, showing that an ‘intellectualisation’ of the term did not take place. In light of these findings, our initial hypothesis was not supported.

Figure 6: Word discrepancies associated with the word ‘jew’ in right and left transcripts

Figure 7: Word discrepancies associated with the word ‘jew’ in right and left comments

Still, we have found that the usage of the term ‘jew’ increased over time in our right-wing dataset. Transcripts mostly used the term as a concept associated with religion and ethnicity, while comments mostly linked it to terms inciting immediate violence (‘kill’, ‘destruction’, ‘disappearance’) and to Jewish-specific customs.

6. Discussion

The above-mentioned findings confirm that there has been a change in online vernacular associated with the concept ‘race’. There has been a process of moving from ‘hate speech’ to 'pseudo-academic language’ related to scientific racism. This can be observed both in terms of the associative strength of ‘race’ with concepts such as ‘realism’ or ‘realists’, as well as the longer ‘discussion’ formats concerning the ‘truth about race’. Ideas that have historically and politically been identified as racist are re-emerging as scientifically valid, rational arguments.

The online attempts to redefine what constitutes an ‘honest discussion about race’ fit Fuller’s theory of our post-truth condition: what is considered ‘knowledge’ is being reframed and destabilized within specific online communities. Communities such as the far-right develop a new epistemology mediated by the rhetorical affordances of online spaces, both consciously and subconsciously. For instance, online encyclopedias and dictionaries are tools and affordances which are being appropriated to articulate ‘truth’. Whereas ‘mainstream’ authorities see race-baiting as a form of racism, the far-right has appropriated this term to signify a logical fallacy, i.e. an obstruction of their rationalist pursuit of ‘truth’. The post-truth condition is not merely the dumbing down of an audience that has lost the ability to tell ‘real’ from ‘fake’; it is the ‘sophisticated’ task of appropriating the tactics of intellectuals and their methods and settings in order to make a political point. YouTube is a space wherein different publics organise, process and articulate their own knowledge, with their own criteria as to what makes it legitimate. In this way, far-right groups oppose what they regard as the increasingly illegitimate 'mainstream', 'politically correct' knowledge about terms such as ‘race’ and ‘jews’. One effective means of this ‘destabilization of knowledge’ is the weaponizing of rhetorical terms in the post-truth age, in which Truth and rhetoric have become more intertwined. It is precisely this battle for online linguistic territory that becomes clear from our research.

Finally, it bears repeating that ours is a descriptive claim about the online inner workings of the far-right. A post-truth epistemology does not necessarily imply a relativistic outlook, nor does it argue that truth itself can be defined arbitrarily. It does, however, show the need to contextualize and historicize the methods of framing ‘truth’. It is therefore crucial, as this report set out to do, to trace these ideas back to their heritage, e.g. the intellectual heritage of scientific racism, so that one can contextualise them as such. It is precisely with this effort to contextualize language defined as ‘hate speech’ that the dangers of the re-emergence of such vernaculars on platforms such as YouTube become clear, even if in their older intellectual format.

Limitations

An initial limitation of our report is the extent to which our terminology database encapsulates all the necessary terms. It already became clear that concepts identified as ‘hate speech’ did not appear frequently within the comments. In addition, right-wing extremists often use coded or hidden language. For instance, triple parentheses (echo brackets) and the term ‘globalist’ serve as dog-whistles used by extremist groups to signal that something is Jewish without explicitly using the term ‘jew’ (Mickiernan). This may also explain the disparity between the online vernacular of the concepts ‘race’ and ‘jew’.

Furthermore, our database has been purely linguistic. Visual language such as memes is also often used to convey extremist behaviours. Memes are especially produced and used on the so-called ‘deep vernacular web’, on platforms such as 4chan (Keulenaar). These remarks all drive home the point that markers of radicalization online are hard to detect. As Saleem et al. point out, hate speech depends on prevailing social norms, context, and individual and collective interpretation, which together make it challenging to establish a linear method for detecting hate speech.

Secondly, expanding on the radical vernacular on 4chan, our project only focused on a single platform: YouTube. This presents a limited frame, as many hate speech vernaculars, while not often familiar to outsiders, originate on platforms such as 4chan (Tuters and Hagen). A cross-platform analysis would perhaps allow the inclusion of other relevant datasets in order to get a more comprehensive representation of intellectualised hate speech. It might then also, in line with the mainstream popularity of figures such as Jordan Peterson, be relevant to look into the mainstream perception and spread of these ideas. In other words, these linguistic terms can be traced in a multitude of ways.

A final limitation is a computational one, considering the fact that the dataset was considerable in volume and that we relied on small samples of the findings to perform the close-reading. While these samples gave us a clear direction and intent of speech, our findings could be strengthened with a more thorough investigation of a larger sample as it might give more solid results.

7. Conclusions: the need for new analytical methods to dispute ancient ideas

Our research started from the assumption that ‘hate speech’ on YouTube has been radicalized, therefore posing the question: how has language referring to ‘race’ and Jewish people (‘jew’) evolved from 2006 to 2018 in right-wing channel transcripts and comments? After this research, we can argue that YouTube content and comment spaces in right-wing and left-wing channels have indeed significantly changed over time. YouTube’s far-right channels, often presented as a space where extremist behaviours, vulgar language, and hate speech occur, have shown significant signs of intellectualised extremism. As we have observed in this project, attitudes and opinions about race are evolving into scientific discussions.

This may dispute the notion -- often implicit in analyses about the relationship between social media and post-truth -- that social media is a space where political language tends to simplify into vulgar, violent, or extreme vernaculars. While this has certainly been confirmed (Oilab, 2018), this project goes to show that certain types of language are not explicitly vulgar but tie back to intellectual corpuses of thought which, we may say, have laid the conceptual foundations for discriminatory attitudes against certain 'identities'. This is particularly the case with scientific racism. Violent dispositions against certain groups are not so much volatile expressions of untamed feelings of violence, but are partly the by-products of old intellectual attitudes and bodies of thought that, as we have seen, have resurged in contemporary, public political discourses online.

The fact that specific types of language inciting radicalization and violence are resurfacing in public discussions online demands further studies on current political trends through the lens of new media. Beyond relying on historical, sociological, and economic methods to identify such radicalisation, the use of computational and natively digital tools can provide a more relevant analysis of the contemporary languages used to describe current political contexts. Current political trends can equally be diagnosed with respect to the concepts contemporary political languages use to describe their present times, as well as to what (intellectual) history -- what intellectual paradigms -- they belong to.

8. References

Arnstad, Henrik. “Ikea Fascism: Metapedia and the Internationalization of Swedish Generic Fascism.” Fascism (2015) 4(2): 194–208.

Blue’s Reply on Negroes. Billboard Magazine, 6 February 1943, 6.

Brooks, David. “The Jordan Peterson Moment”. The New York Times. 25 January 2018. 17 January 2019. https://www.nytimes.com/2018/01/25/opinion/jordan-peterson-moment.html.

Lewis, Rebecca. “Alternative Influence: Broadcasting the Reactionary Right on YouTube”. Data & Society. 18 September 2018. 17 January 2019. https://datasociety.net/output/alternative-influence/

Room for Discussion. “Lustrum: Jordan Peterson on Society in Crisis”. Room for Discussion. 16 January 2019.

https://roomfordiscussion.com/interview/lustrum-jordan-peterson-on-society-in-crisis/

Kakutani, Michiko. The Death of Truth. New York: Harper Collins, 2015.

McIntyre, Lee C. Post-Truth: MIT’s Essential Knowledge Series. Cambridge MA: MIT Press, 2018.

Mickiernan, Jared. “Q+A: EX-NEO-NAZI CHRISTIAN PICCIOLINI”. 20 December 2017. 17 January 2019. http://caringmagazine.org/qa-ex-neo-nazi-christian-picciolini/.

“Race-baiting”. Merriam Webster. 10 January 2019. https://www.merriam-webster.com/dictionary/race-baiting.

“Race-baiting”. Urban Dictionary. 10 January 2019. https://www.urbandictionary.com/define.php?term=Race-baiting.

Rebel Media. “Prof. Jordan Peterson: “Bloody neo-Marxists have invaded the campuses"”. YouTube. 5 November 2016. 17 January 2019.

https://www.youtube.com/watch?v=X_Kfm_qWpN0.

Folia. “‘Room for Discussion, zet een extra gast naast Jordan Peterson’”. Folia. 25 October 2018. 16 January 2019.

https://www.folia.nl/opinie/124762/room-for-discussion-zet-een-extra-gast-naast-jord

Saleem, Haji Mohammad, et al. “A Web of Hate: Tackling Hateful Speech in Online Social Spaces”. arXiv preprint arXiv:1709.10159 (2017).

Tuters, Marc and Hagen, Sal. “Who are (((they)))?: On Online Hate, Tasteless Transgression, and Memetic Versatility”. OILab. 30 August 2018. 18 January 2019. https://oilab.eu/who-are-they-on-online-hate-tasteless-transgression-and-memetic-versatility/

Keulenaar, Emillie V. “Freedom and taboos in the international ghettos of the web”. OILab. 20 April 2018. 17 January 2019. https://oilab.eu/freedom-and-taboos-in-the-international-chanosphere/

Weiss, Bari. “Meet the Renegades of the Intellectual Dark Web”. The New York Times. 8 May 2018. 17 January 2019.

https://www.nytimes.com/2018/05/08/opinion/intellectual-dark-web.html.

Rogers, Richard. Digital Methods. Cambridge, MA, USA: MIT Press, 2015.

Pohjonen, Matti, and Sahana Udupa. “Extreme Speech Online: An Anthropological Critique of Hate Speech Debates”. International Journal of Communication 11 (2017): 1173–1191.

Lyotard, Jean-François. 1984. The Postmodern Condition: a Report on Knowledge. Manchester. Manchester University Press.

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
